A Challenging And Worthwhile Objective

Last week ENCODE hosted a three-day Research Applications and Users Meeting for the broader research community. The Encyclopedia of DNA Elements (ENCODE) Consortium is a global collaboration of research groups funded by the National Human Genome Research Institute (NHGRI). Members of the ENCODE Consortium are addressing the challenge of creating a catalog of the functional elements of the human genome that will provide a foundation for studying the genomic basis of human biology and disease.

For Phase 3, the ENCODE Consortium is using next-generation technologies and methods to expand the size and scope of the catalog content created in earlier phases. ENCODE's Phase 3 analysis will require millions of computing core hours and will generate nearly one petabyte of raw data over the next 18 months. It is hardly surprising, then, that in addition to keynote lectures and presentations by distinguished speakers, the ENCODE 2015 meeting agenda was packed with hands-on workshops and tutorials designed to give attendees the knowledge and skills they need to access, navigate, and analyze ENCODE data. As announced last week, the ENCODE Consortium's Data Coordination Center (DCC), located at Stanford University, has adopted the DNAnexus platform, enabling virtually unlimited access to data and bioinformatics tools in support of Phase 3 research.

Seamless Live Demo

Many large-scale genomics studies have been limited by a lack of computational power and collaborative data management infrastructure. The power of the DNAnexus platform was demonstrated firsthand when roughly 150 ENCODE workshop attendees launched the RNA-Seq processing pipeline on the platform in unison, each on a sample dataset. That burst of data retrieval and processing could bog down a typical institutional computing resource for hours or days, possibly "freezing out" any additional user requests during that time; the cloud-based DNAnexus platform simply scaled, as usual, to meet demand. Every workshop participant's workflow was processed at full speed.

More Than Scale

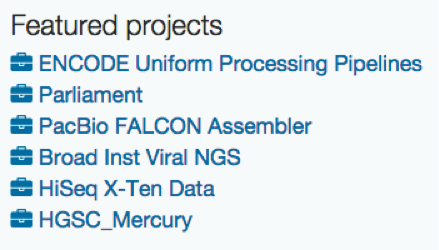

Although a scalable solution capable of processing thousands of datasets was a key requirement for the DCC, it was not the only factor in its decision. Developing version-controlled ENCODE pipelines is a priority in the current phase of the project, to ensure that data released to the public are consistently processed. The DCC is tasked with centralizing the project's raw sequencing data under uniform metadata standards and bioinformatics analysis. It will use DNAnexus to give the Consortium a secure, unified platform that already connects thousands of scientists around the world, and to provide the transparency, reproducibility, and data provenance needed for consistency among ENCODE pipelines and results. Stanford has open-sourced the ENCODE pipelines on GitHub, and they are also available in a public project on the DNAnexus platform, along with other public data and pipelines.

DNAnexus is proud to support the ENCODE DCC at Stanford University, which serves as the data warehouse and processing hub for the ENCODE project. We believe cloud-based solutions for genome projects will have a blockbuster impact on accelerating genomic medicine.